IMG SERIES4

Beyond next-gen AI

The automotive revolution

Multi-core scalability and flexibility

Ultra-low latency

Leading safety mechanisms

Incredible performance

A key factor in neural network performance is sustaining high utilisation of the available compute, measured in tera operations per second (TOPS). Thanks to its multi-core scalability, IMG Series4 outperforms other solutions on the market by an order of magnitude, with industry-leading performance metrics.

A single IMG Series4 core in 7nm can deliver up to 12.5 TOPS at 1.2GHz. Cores can be arranged in clusters of 2, 4, 6 or 8, and multiple clusters can be laid down on one chip. An 8-core cluster delivers 100 TOPS (8 × 12.5), and six of these on a single SoC would deliver 600 TOPS.
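The cluster arithmetic above can be checked with a short sketch. It assumes peak throughput scales linearly with core count at the quoted 12.5 TOPS per core, and it treats utilisation as a hypothetical input parameter; the function names are illustrative only and are not part of any Imagination SDK.

```python
# Minimal sketch: peak-throughput arithmetic for IMG Series4 configurations.
# Assumption: peak TOPS scales linearly with core count at the quoted
# 12.5 TOPS per core (7nm, 1.2GHz). Function names are hypothetical.

TOPS_PER_CORE = 12.5              # quoted peak per core
CORE_COUNTS = (1, 2, 4, 6, 8)     # core counts of the 4NX-MC1 to MC8 families


def cluster_tops(cores: int, tops_per_core: float = TOPS_PER_CORE) -> float:
    """Peak TOPS of one cluster with the given number of cores."""
    if cores not in CORE_COUNTS:
        raise ValueError(f"unsupported core count: {cores}")
    return cores * tops_per_core


def soc_tops(clusters: int, cores_per_cluster: int = 8) -> float:
    """Peak TOPS of an SoC built from identical clusters."""
    if clusters < 1:
        raise ValueError("need at least one cluster")
    return clusters * cluster_tops(cores_per_cluster)


def effective_tops(peak_tops: float, utilisation: float) -> float:
    """Delivered throughput for a hypothetical utilisation fraction in [0, 1]."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    return peak_tops * utilisation


if __name__ == "__main__":
    for n in CORE_COUNTS:
        print(f"IMG 4NX-MC{n}: {cluster_tops(n):g} TOPS peak")
    print(f"Six 8-core clusters: {soc_tops(6):g} TOPS peak")
    # Example only: 80% utilisation is an assumed figure, not a measured one.
    print(f"At 80% utilisation: {effective_tops(soc_tops(6), 0.8):g} TOPS")
```

Run as a script, it reports 100 TOPS for an 8-core cluster and 600 TOPS for six of them, matching the figures quoted for IMG Series4. The `effective_tops` helper is there only to show why utilisation matters as much as the peak figure.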

Depending on configuration, IMG Series4 is more than 100 times faster than a GPU and 1000 times faster than a CPU for AI acceleration.

100x faster than a GPU

1000x faster than a CPU

Imagination and Visidon have partnered to power the transition to deep-learning-based super resolution for embedded applications across the mobile, digital TV (DTV) and automotive markets.

“Power efficiency and high performance are two of the main pillars that Visidon builds its solutions on. We were surprised to see our deep-learning networks run so easily on Imagination’s IMG Series4 NNA and we were impressed with the overall power efficiency maintained while processing high compute workloads. We look forward to our partnership evolving as we unlock the future of AI-powered super resolution technology together.”

CTO, Visidon

Power and bandwidth efficiency

IMG Series4 provides exceptional bandwidth efficiency. Tensor processing normally requires moving data out to external memory and back, which consumes both memory bandwidth and power.

The patent-pending Imagination Tensor Tiling (ITT) technology, new for IMG Series4, addresses this problem. Tensors are efficiently packaged into blocks that are processed in local on-chip memory. This significantly reduces data transfers between network layers, cutting bandwidth by up to 90%.
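To illustrate why keeping intermediate tensors on chip saves so much bandwidth, the toy model below counts external-memory traffic for a chain of layers with and without tiling. It is a generic layer-fusion tiling model, not Imagination’s ITT implementation, whose internals are not described here. The tensor sizes, on-chip capacity and function names are hypothetical, and the model ignores weights and the overlap between neighbouring tiles.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class TrafficEstimate:
    untiled_bytes: int  # external-memory traffic without tiling
    tiled_bytes: int    # external-memory traffic with fused, tiled layers
    tiles: int          # tiles needed so one input/output tile pair fits on chip

    @property
    def saving(self) -> float:
        """Fraction of external-memory traffic avoided by tiling."""
        return 1 - self.tiled_bytes / self.untiled_bytes


def estimate_traffic(activation_bytes: list[int], on_chip_bytes: int) -> TrafficEstimate:
    """Toy traffic model for a chain of layers (hypothetical, not ITT itself).

    activation_bytes[0] is the network input, activation_bytes[-1] the final
    output; everything in between is an intermediate tensor. Weights and
    tile-boundary overlap (halos) are ignored.
    """
    if len(activation_bytes) < 2:
        raise ValueError("need at least an input and an output tensor")
    if on_chip_bytes <= 0:
        raise ValueError("on-chip memory must be positive")
    inp, *intermediates, out = activation_bytes
    # Untiled: each intermediate is written to external memory, then read back.
    untiled = inp + 2 * sum(intermediates) + out
    # Tiled: split every tensor into the same number of tiles so that one tile
    # of any layer's input plus one tile of its output fits in on-chip memory.
    largest_pair = max(a + b for a, b in zip(activation_bytes, activation_bytes[1:]))
    tiles = math.ceil(largest_pair / on_chip_bytes)
    # Intermediates never leave the chip; only input and final output cross.
    tiled = inp + out
    return TrafficEstimate(untiled, tiled, tiles)


if __name__ == "__main__":
    sizes = [1_000_000, 2_000_000, 2_000_000, 1_000_000, 500_000]  # hypothetical
    est = estimate_traffic(sizes, on_chip_bytes=512 * 1024)
    print(f"{est.tiles} tiles, {est.saving:.0%} less external traffic")
```

With these hypothetical sizes the model avoids roughly 87% of external traffic using 8 tiles. Real savings depend on the network, tile overlap and the amount of on-chip memory available.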

Advanced Signal Processing and FFT

Neural network accelerators (NNAs) allow signal processing tasks, such as image and audio processing, that would otherwise be performed elsewhere in the system to run on the same device as the neural network. This reduces overall bandwidth, power and latency requirements.

Imagination’s automotive solution

IMG Series4 core families

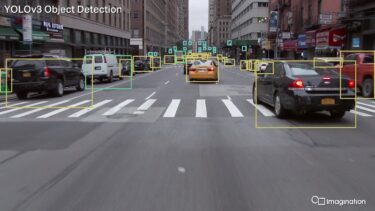

IMG Series4, Imagination’s next-generation neural network accelerator (NNA), is ideal for advanced driver-assistance systems (ADAS) and autonomous vehicles such as robotaxis. The cores include features such as Imagination Tensor Tiling, advanced safety mechanisms and intelligent workload management.

IMG 4NX-MC1

The ideal single-core solution for neural network acceleration.

IMG 4NX-MC2

The leading dual-core solution for neural network acceleration.

IMG 4NX-MC4

The mega-performance quad-core solution for neural network acceleration.

IMG 4NX-MC6

The super-high performance hexa-core solution for neural network acceleration.

IMG 4NX-MC8

The ultra-high performance octa-core solution for neural network acceleration.

What is the IMG Series4 NNA?

IMG Series4 is a ground-breaking neural network accelerator for the automotive industry to enable ADAS and autonomous driving. Find out why IMG Series4 meets the requirements for large-scale commercial implementation and why it is becoming the industry-standard platform of choice for deploying advanced driver assistance and self-driving cars.
