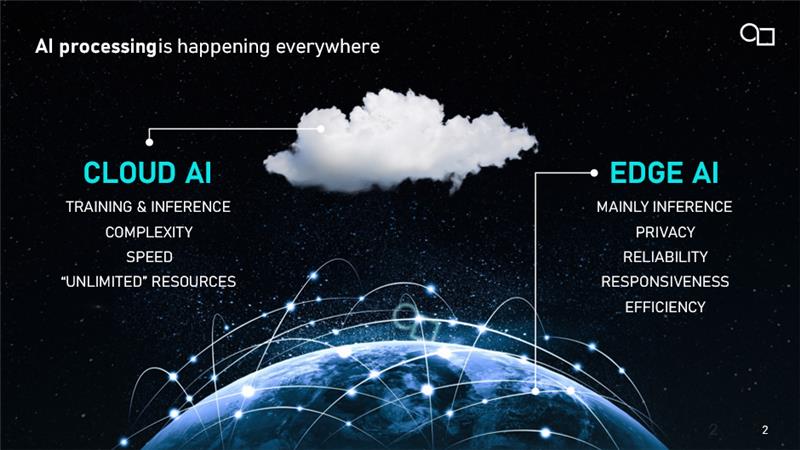

EDGE AI vs CLOUD AI

The difference between Edge AI and Cloud AI is one of the most important distinctions in modern artificial intelligence. Both approaches offer unique advantages, and understanding how they differ is essential when designing scalable, efficient, and responsive AI systems.

WHAT IS CLOUD AI?

Cloud AI refers to artificial intelligence processing that occurs in large, centralised data centres. It is most commonly used to train complex machine learning models and handle heavy inference workloads that require vast compute power. Developers often rely on cloud-based AI services because they offer near-unlimited scalability, access to high-performance GPUs, and consistency across devices. Once models are trained, the cloud can also be used to serve AI inference, where a pre-trained model receives a user’s input and returns a prediction or result, often via an internet-connected app or service.

WHAT IS EDGE AI?

In contrast to Cloud AI, Edge AI moves the inference process directly onto the device, whether that is a smartphone, a self-driving car, a surveillance camera, or a wearable. Instead of sending data to a remote server, AI processing happens locally and in real time. This approach significantly reduces latency, improves data privacy, and lowers power consumption by eliminating the need to transfer large datasets to and from the cloud. Edge AI has become increasingly viable thanks to advances in low-power AI processors, neural processing units (NPUs), and efficient edge inference models.

For a deeper dive into “What is Edge AI?”, see our other article on the topic.

With AI applications now permeating everything from industrial automation to personal health tracking, the Edge AI vs Cloud AI question is more relevant than ever. Let’s explore how they compare across performance, efficiency, scalability, security, and ideal use cases, so you can choose the right architecture for your next AI deployment.

Before we look in more detail at what sets Edge AI and Cloud AI apart, including their benefits and weaknesses, here is a snapshot of the key differences between them.

| Aspect | Edge AI | Cloud AI |
|---|---|---|
| Latency | Ultra-low (on-device) | Higher (network-dependent) |
| Connectivity Required | No | Yes |
| Data Privacy | High (local processing) | Moderate (data leaves device) |
| Compute Power | Limited (needs efficiency) | Scalable |
| Key Use Cases | Wearables, robotics, smart homes | Training LLMs, analytics, SaaS apps |

EDGE AI vs CLOUD AI: KEY APPLICATION AREAS

EDGE AI

Vehicle Autonomy: By performing inference at the edge, self-driving cars can interpret sensor data and make critical driving decisions instantly, without relying on cloud communication.

Robotic Devices: Robots use Edge AI to perceive and navigate their environments and to communicate with the people around them.

Smart Homes: Smart speakers are loaded with advanced AI algorithms so that they can detect voices and interpret meaning. Other consumer electronics in the home can be used to optimise resources.

Wearables: Even small devices like smartwatches now benefit from AI at the edge, interpreting sensor data locally to deliver real-time, privacy-focused feedback.

More information on where Edge AI is used can be found in our other article.

CLOUD AI

Medical Imaging: Advanced computer vision techniques are helping doctors detect anomalies in diagnostic images faster and more accurately.

Content Creation: The latest generation of natural language processing models and image creation software has transformed the face of content creation, giving everyone the power to be a great wordsmith or designer.

Cybersecurity: AI is helping to protect businesses from IT threats by analysing the flow of internet traffic and detecting anomalies.

AI Assistants: Increasingly common in businesses, AI assistants can produce accurate meeting minutes, polish emails, and help upskill employees in a short space of time.

EDGE AI vs CLOUD AI: BENEFITS

EDGE AI

Resilience: Because all data processing takes place on the device, Edge AI operates even without a stable connection to the internet.

Low Latency: Transmitting data to and from a central cloud server takes time. Edge AI reduces latency by enabling AI processing on the device, eliminating the need for round-trips to the cloud.

Privacy: Transmitting data to third-party cloud services introduces an element of risk that, for some types of information (financial, healthcare), is unacceptable. Processing data on the device keeps it local.

Efficiency: Data transmission costs power, and while cloud AI models are more capable than most Edge AI models, they also consume a lot more energy.
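The low-latency benefit can be made concrete with some back-of-the-envelope arithmetic for the self-driving case. The sketch below is a minimal illustration, assuming hypothetical latency figures (an 80 ms network round trip plus 20 ms of cloud inference, versus 30 ms of slower on-device inference); `LatencyBudget` and `distance_travelled_m` are illustrative names, and the numbers are assumptions, not measurements.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical per-request latency components, in milliseconds."""
    network_round_trip_ms: float  # time to send input to a server and get the result back (0 on-device)
    inference_ms: float           # time spent running the model itself

    @property
    def total_ms(self) -> float:
        return self.network_round_trip_ms + self.inference_ms


def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres a vehicle covers while it waits `latency_ms` for a result."""
    speed_m_per_s = speed_kmh / 3.6  # convert km/h to m/s
    return speed_m_per_s * latency_ms / 1000.0


# Illustrative assumptions only: the cloud model runs faster,
# but the network round trip dominates the total.
cloud = LatencyBudget(network_round_trip_ms=80.0, inference_ms=20.0)
edge = LatencyBudget(network_round_trip_ms=0.0, inference_ms=30.0)

for name, budget in (("cloud", cloud), ("edge", edge)):
    metres = distance_travelled_m(100.0, budget.total_ms)
    print(f"{name}: {budget.total_ms:.0f} ms -> {metres:.2f} m travelled at 100 km/h")
```

With these assumed numbers, a car at 100 km/h covers about 2.78 m while waiting for the cloud, versus about 0.83 m with on-device inference, even though the edge model itself is slower.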

CLOUD AI

Performance: Cloud-based AI solutions have access to immense computing resources, enabling high-speed applications and large-scale inference with powerful server infrastructure.

Accessibility: Cloud services are available to anyone with an internet connection, whereas Edge AI requires a device with suitable hardware.

EDGE AI vs CLOUD AI: LIMITATIONS

EDGE AI

Performance: Edge AI devices have less computing power, and their models need to be smaller than cloud equivalents, which can affect speed and output quality.

CLOUD AI

Power consumption: Cloud AI requires a lot of energy. In 2023, data centres were reported to have consumed over 4% of US electricity. By some estimates, a couple of queries to a large language model can use as much energy as a full smartphone charge.

Cost: With pricing starting at about $8/day for access to a single entry-level GPU, operating costs can start to add up for businesses using cloud services for their AI applications.
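To put that starting price in perspective, here is a simple worked calculation, assuming a single entry-level GPU rented around the clock at the ~$8/day figure and ignoring storage, networking, and other charges:

$$
\$8\ \text{per day} \times 365\ \text{days} = \$2{,}920\ \text{per GPU per year}
$$

A deployment needing ten such GPUs would therefore start at roughly $29,200 a year before any of those additional charges.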

EDGE AI vs CLOUD AI: HARDWARE COMPARISON

EDGE AI

Heterogeneous: Edge AI typically runs on an SoC that combines a diverse range of processors and accelerators (CPUs, GPUs, NPUs). AI laptops in 2025 deliver about 75 TOPS combined across CPU, GPU, and NPU.

Diverse: The edge device ecosystem is incredibly diverse, and software developers must contend with different form factors, processors, and architectures.
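TOPS (tera-operations per second, i.e. trillions of operations per second) is a peak figure. As a rough worked example, assuming a hypothetical model that needs 10 billion operations per inference, 75 TOPS gives a theoretical upper bound of:

$$
\text{inferences per second} \le \frac{75 \times 10^{12}\ \text{ops/s}}{10 \times 10^{9}\ \text{ops/inference}} = 7{,}500
$$

Real throughput is lower, since it also depends on memory bandwidth, numeric precision, and how well the workload maps onto each processor in the SoC.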

CLOUD AI

Homogeneous: Historically, cloud infrastructure has been CPU-based, but GPU-accelerated services are now growing in popularity to support AI and graphics applications. Customers can choose a platform and scale their application across multiple identical instances.

Few Vendors: You can count on one hand the number of companies supplying hardware into cloud systems.

EDGE AI vs CLOUD AI: SOFTWARE & ARCHITECTURE

EDGE AI

Small and Efficient: Edge AI models tend to have fewer than 10 billion parameters and are optimised with techniques like sparsity and quantisation to deliver accurate results with lower power consumption.
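Quantisation stores model weights at lower numeric precision. The sketch below is a minimal illustration, assuming symmetric per-tensor INT8 quantisation in NumPy (one common scheme among several; sparsity is not shown). It quantises a random weight matrix, checks the rounding error, and estimates weight storage for a 10-billion-parameter model at different precisions. The function names are illustrative, not taken from any particular toolkit.

```python
import numpy as np


def quantise_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor INT8 quantisation: weights ~= scale * q, with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantise_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Map INT8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Storage for the weights alone (ignores activations, caches and runtime overhead)."""
    return num_params * bits_per_param / 8 / 1e9


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)
q, scale = quantise_int8(w)
max_err = float(np.max(np.abs(w - dequantise_int8(q, scale))))
print(f"INT8 max abs error: {max_err:.6f} (rounding bound scale/2 = {scale / 2:.6f})")

for bits in (32, 16, 8, 4):
    print(f"10B parameters at {bits}-bit: {weight_memory_gb(10e9, bits):.0f} GB")
```

The footprint loop prints 40, 20, 10, and 5 GB, which illustrates why a model of this size becomes far more practical on an edge device once its weights are stored at 8 or 4 bits.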

CLOUD AI

Large and Accurate: Cloud AI models have started to break the trillion-parameter mark, and algorithmic innovations such as self-attention mechanisms have massively improved accuracy.
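Self-attention lets every token in a sequence weigh every other token when building its representation. Below is a minimal single-head sketch of scaled dot-product self-attention in NumPy, assuming random projection matrices in place of trained weights; real models stack many such heads and layers.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x has shape (seq_len, d_model); returns outputs of shape (seq_len, d_v)
    and the (seq_len, seq_len) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # similarity of every token with every other token
    weights = softmax(scores, axis=-1)  # each row is a probability distribution over tokens
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))
# Random projections stand in for trained weights in this sketch.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)
```

Note that the score matrix is `seq_len × seq_len`, so compute and memory for this step grow quadratically with sequence length, which is part of why running large attention-based models is so demanding.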

While Edge AI and Cloud AI each offer distinct advantages, many AI deployments are now embracing a hybrid AI architecture. But what is Hybrid AI?

HYBRID AI: COMBINING THE BEST OF EDGE AI & CLOUD AI

Hybrid AI is a solution that combines the best of both worlds. By leveraging Edge AI for real-time, on-device processing and Cloud AI for large-scale model training, data storage, and model updates, hybrid systems can deliver optimal performance, responsiveness, and scalability.

For example, in a smart city deployment, traffic cameras may use AI at the edge to detect incidents or congestion in real time. That data can then be sent to the cloud for broader trend analysis, historical archiving, or model retraining. Similarly, a wearable medical device may rely on inference at the edge to flag irregularities instantly, while syncing with cloud-based AI solutions to provide a complete picture to healthcare providers.

This hybrid edge-cloud AI model is especially useful in industries where low latency, data privacy, and AI processing on-device are essential, but where long-term insights and model improvement still depend on powerful cloud infrastructure.
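A minimal sketch of the edge-first hybrid pattern, assuming a Python runtime on the device and two stand-in models: the router answers on the device first, escalates low-confidence cases to a cloud model only when a connection is available, and queues results for later upload. `HybridRouter`, the 0.8 confidence threshold, and both stub models are hypothetical; a real deployment would substitute actual models and a real upload path.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Model = Callable[[List[float]], Tuple[str, float]]  # returns (label, confidence)


@dataclass
class Prediction:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


@dataclass
class HybridRouter:
    """Hypothetical edge-first router: answer on the device, use the cloud when it helps."""
    edge_model: Model
    cloud_model: Model
    is_online: Callable[[], bool]
    confidence_threshold: float = 0.8
    pending_uploads: List[Prediction] = field(default_factory=list)

    def predict(self, sample: List[float]) -> Prediction:
        # Always run on-device first: no network round trip, and it works offline.
        label, conf = self.edge_model(sample)
        result = Prediction(label, conf, "edge")
        # Escalate uncertain cases to the larger cloud model only if a connection exists.
        if conf < self.confidence_threshold and self.is_online():
            label, conf = self.cloud_model(sample)
            result = Prediction(label, conf, "cloud")
        # Queue only the result (not the raw sensor data) for later cloud-side analysis.
        self.pending_uploads.append(result)
        return result

    def sync(self) -> int:
        """Flush queued results when online; returns how many would be uploaded."""
        if not self.is_online():
            return 0
        count = len(self.pending_uploads)
        # A real system would send these to a cloud service here; the sketch just clears them.
        self.pending_uploads.clear()
        return count


def tiny_edge_model(sample: List[float]) -> Tuple[str, float]:
    # Stand-in for a small on-device model: confident only when the signal is clear-cut.
    score = sum(sample) / len(sample)
    return ("irregular" if score > 0.5 else "normal", abs(score - 0.5) * 2)


def big_cloud_model(sample: List[float]) -> Tuple[str, float]:
    # Stand-in for a larger, more accurate cloud model.
    return ("normal", 0.99)


router = HybridRouter(tiny_edge_model, big_cloud_model, is_online=lambda: True)
print(router.predict([0.9, 0.95, 1.0]).source)  # clear-cut signal, handled on the device
print(router.predict([0.5, 0.55, 0.6]).source)  # ambiguous signal, escalated to the cloud
print(router.sync())                            # both queued results flushed
```

Queuing results rather than raw inputs is one way to preserve the privacy benefit of on-device processing; a system that retrains models in the cloud would need to decide explicitly which data, if any, leaves the device.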

With the rise of powerful AI processors for edge devices and growing availability of cloud AI services, hybrid AI is becoming a practical and scalable standard. It enables developers to deploy AI in bandwidth-constrained environments while still benefiting from the full processing muscle of the cloud when needed.

As AI continues to evolve, expect hybrid AI strategies to play a central role in future architectures, especially in sectors like automotive, robotics, IoT, and industrial automation.

Here at Imagination, we develop top-class AI processors for edge devices, purpose-built to deliver efficient, scalable intelligence across industries, from automotive to IoT. Find out more about our latest generation of Edge AI technology.