- 10 October 2018

- Benny Har-Even

After a period out of the spotlight, ray tracing technology has recently come back into focus, taking up a lot of column inches in the tech press. The primary reason is that NVIDIA has released graphics cards for the PC gaming market with hardware support for ray tracing.

It’s still early days, and even the PC GPU giant didn’t have ray-traced games available at launch, proving once again how difficult it is to create an ecosystem around a new technology. Once the puzzle has been completed, though, consumers will see the full picture and will want ray-traced graphics on all their devices.

Imagination was the first to make ray tracing technology a practical reality. Our approach differed in that it was designed from the ground up for deployment on embedded hardware within a strict power envelope. In other words, Imagination was doing what it does best: making cutting-edge technology work efficiently.

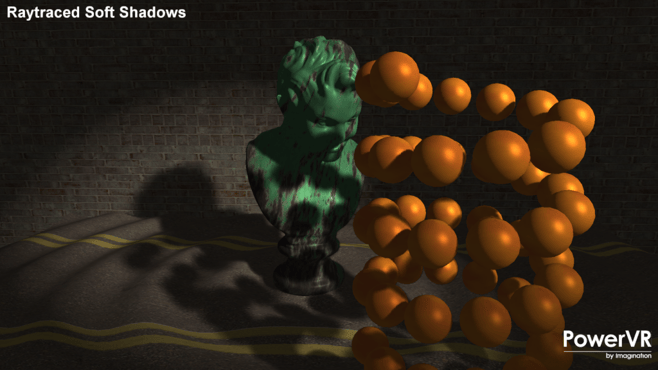

In any article about ray tracing, the term is quickly followed by the phrase ‘holy grail’: something that has long been sought after but has always seemed just out of reach. However, we first talked about our ray tracing IP back in 2012, and in 2014 we followed this with the launch of our ray tracing GPU family, featuring a block dedicated to accelerating ray tracing. This was intended for use in mobile hardware, but for demonstration and ease of development, we integrated the chip into a PCIe evaluation board, which was up and running by 2016.

Today, PowerVR ray tracing technology is available as a licensable architecture capable of enabling stand-alone ray-tracing processors or hybrid ray tracing/rasterization devices.

So, what is all the fuss about?

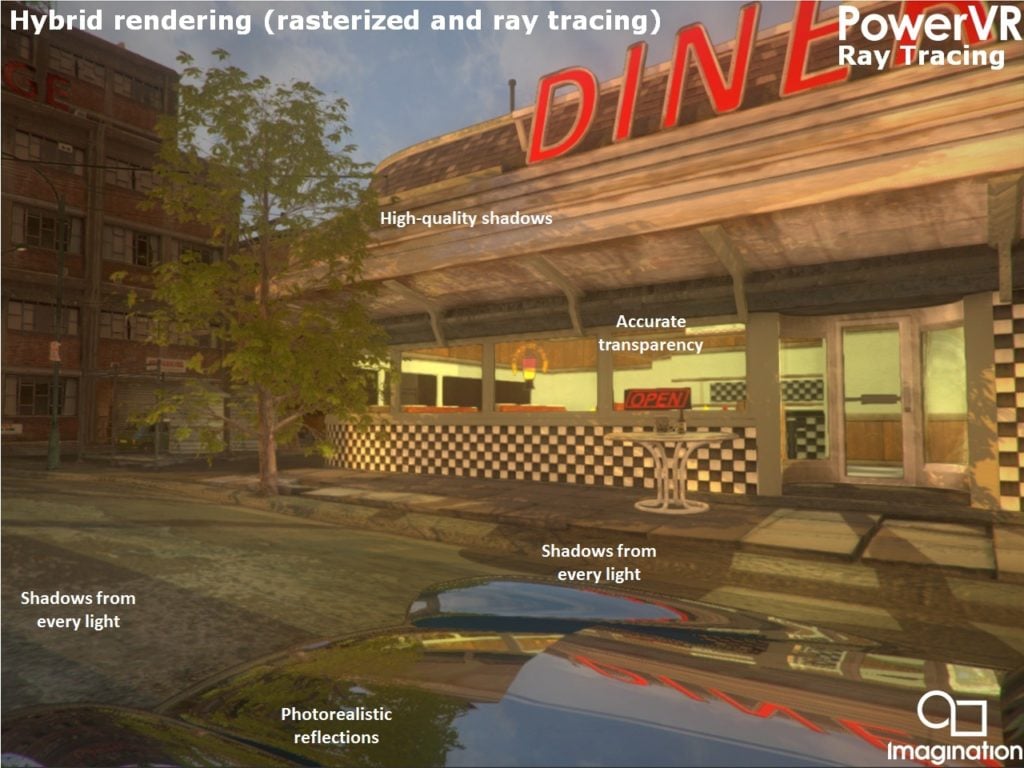

Let’s quickly recap why ray tracing is considered such a big deal. In any 3D graphics scene, the level of realism is highly dependent on the lighting. In traditional rendering, known as rasterization, lighting is approximated with techniques such as pre-calculated light maps and separately rendered shadow maps, which are applied to the scene to simulate how it should look. However, this is, at best, a rough simulation.

Ray tracing is different. It mimics how light behaves in the real world.

In real life, light travels from a source, say the sun outdoors or a lamp inside a room, onto an object. The light then interacts with that object and, depending on its surface properties, bounces onto another surface. It carries on bouncing around, creating light and shade.

In ray tracing on a computer, or more accurately path tracing, the process is reversed. Rays are virtually cast from the camera viewpoint onto objects in the scene; algorithms calculate how each ray interacts with the surfaces it hits, and then trace its path from object to object back to the light source. The result is a scene lit just as it would be by the sun in the real world, with realistic reflections and shadows.
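To make the reversed direction concrete, here is a minimal Python sketch of backward ray tracing: a ray leaves a pinhole camera, finds the nearest sphere it hits, and a second ray is then traced from that point towards a point light to decide whether the point is lit or in shadow. It is an illustrative simplification, not Imagination’s implementation. The sphere-only scene, the names `camera_ray` and `trace`, the 90-degree field of view and the Lambertian shading are all assumptions, and it handles direct lighting only, whereas full path tracing would follow further, randomly sampled bounces.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    center: Vec
    radius: float


def intersect(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance t along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 1e-6:
            return t
    return None


def camera_ray(px: int, py: int, width: int, height: int) -> Vec:
    """Unit direction from a pinhole camera at the origin through pixel (px, py)."""
    # Image plane at z = -1, i.e. a 90-degree vertical field of view (assumed).
    x = (2.0 * (px + 0.5) / width - 1.0) * width / height
    y = 1.0 - 2.0 * (py + 0.5) / height
    return normalize((x, y, -1.0))


def trace(origin: Vec, direction: Vec, spheres: list, light_pos: Vec) -> float:
    """Brightness in [0, 1] seen along one camera ray (direct light only)."""
    # 1. Camera ray: find the nearest surface it hits.
    nearest = None
    for s in spheres:
        t = intersect(origin, direction, s)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, s)
    if nearest is None:
        return 0.0  # ray escaped the scene: black background
    t, hit_sphere = nearest
    hit = add(origin, scale(direction, t))
    normal = normalize(sub(hit, hit_sphere.center))

    # 2. Shadow ray: trace from the hit point back towards the light source.
    to_light = sub(light_pos, hit)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = scale(to_light, 1.0 / light_dist)
    start = add(hit, scale(normal, 1e-4))  # nudge off the surface to avoid self-hits
    for s in spheres:
        t_block = intersect(start, to_light, s)
        if t_block is not None and t_block < light_dist:
            return 0.0  # something sits between the point and the light

    # 3. Lit: simple Lambertian (diffuse) falloff with angle to the light.
    return max(0.0, dot(normal, to_light))
```

Calling `trace` once per pixel with the direction from `camera_ray` produces a complete, if very plain, image; reflections would be added by recursively tracing a mirrored ray from each hit point.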

Traditionally, it has not been possible for a computer to do this in real time because the computational load was too high, so games have instead relied on rasterization to ‘cheat’.

Of course, while we haven’t had it in games, we’re all familiar with how good ray tracing can look. You’ll have seen the results in 3D animated movies, with fantastic-looking characters and scenes of photo-realistic quality. However, these films take months to render on dedicated server farms, which doesn’t work for games: they must be rendered in real time at a minimum of 30 frames per second, leaving roughly 33 ms per frame.

As discussed, this has never been possible before because of the huge computational cost involved, but Imagination changed the game with a hybrid approach that combines the speed of rasterization with the visual accuracy of ray tracing.

Making ray tracing happen

If you want to learn more about how we did this, integrating shadows, reflections and refractions into a traditional raster game engine pipeline, then you’ll want to read the linked post. The two techniques are also compared in detail in our blog post, Hybrid rendering for real-time lighting: ray tracing vs rasterization, which is fascinating and well worth a read.
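As a rough illustration of the hybrid idea, the sketch below assumes a raster pass has already written a G-buffer (world position, normal and albedo for each pixel) and uses ray tracing only for a shadow query from each visible surface towards the light. The `GBufferTexel` type, the `shade_hybrid` and `shadow_ray_blocked` functions, the sphere-only occluders and the greyscale shading are hypothetical simplifications for this example, not the pipeline described in the linked posts or any PowerVR API.

```python
import math
from dataclasses import dataclass


@dataclass
class GBufferTexel:
    """One pixel of a hypothetical G-buffer written by the raster pass."""
    position: tuple  # world-space position of the visible surface
    normal: tuple    # unit surface normal
    albedo: float    # base colour, greyscale for brevity


@dataclass
class Sphere:
    center: tuple
    radius: float


def shadow_ray_blocked(origin, direction, sphere, max_t):
    """True if a unit-length ray hits the sphere at a distance between 0 and max_t."""
    oc = [o - c for o, c in zip(origin, sphere.center)]
    b = sum(a * d for a, d in zip(oc, direction))
    c = sum(a * a for a in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < t < max_t for t in (-b - root, -b + root))


def shade_hybrid(gbuffer, occluders, light_pos):
    """Deferred lighting: raster G-buffer in, ray-traced shadows out."""
    image = []
    for texel in gbuffer:
        # Visibility from the camera was already resolved by rasterization;
        # the only ray traced per pixel is the shadow ray towards the light.
        to_light = [l - p for l, p in zip(light_pos, texel.position)]
        dist = math.sqrt(sum(v * v for v in to_light))
        to_light = [v / dist for v in to_light]
        start = [p + 1e-4 * n for p, n in zip(texel.position, texel.normal)]
        in_shadow = any(shadow_ray_blocked(start, to_light, s, dist) for s in occluders)
        diffuse = max(0.0, sum(n * l for n, l in zip(texel.normal, to_light)))
        image.append(0.0 if in_shadow else texel.albedo * diffuse)
    return image
```

The point being illustrated is that primary visibility stays on the rasterizer, while rays are spent only where they improve the image; reflections and refractions could be handled the same way, with one extra ray per pixel launched from the G-buffer.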

Of course, new hardware and new techniques are nothing without API support, and to that end, Imagination created OpenRL as an API for ray tracing. We later added extensions for ray tracing to OpenGL ES™ and finally for the Vulkan® API too.

Hybrid ray tracing is now supported in Microsoft’s DirectX 12 through the DirectX Raytracing (DXR) API, a move that has paved the way for the brand-new desktop PC cards.

Efficiency vs brute force

A key difference, of course, is that while a desktop NVIDIA RTX card has been measured drawing 225 W while gaming, our solution was designed specifically with mobile power envelopes in mind. Our chip operates at just 2 W and the demo board at around 10 W. What’s more, it was built using an older 28 nm process technology, demonstrating that our solution operates at an order of magnitude lower power. Its peak rate was 300 MRays/s which, considering the power envelope, compares favourably with the new NVIDIA cards.
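The figures above can be turned into a quick back-of-the-envelope comparison. The sketch below uses only the numbers quoted in this post (2 W chip, around 10 W board, 225 W measured for the RTX card, 300 MRays/s peak) plus one assumption that is not from the post: a 1920×1080 frame at the 30 frames per second real-time minimum. It also assumes the peak ray rate could be sustained, which real workloads will not always achieve.

```python
# Figures quoted in this post.
CHIP_POWER_W = 2.0
BOARD_POWER_W = 10.0
RTX_GAMING_POWER_W = 225.0
PEAK_RAYS_PER_S = 300e6

# Assumption (not from the post): a 1080p frame at the 30 fps real-time minimum.
WIDTH, HEIGHT, FPS = 1920, 1080, 30


def rays_per_joule(rays_per_s: float, watts: float) -> float:
    """Energy efficiency: rays traced per joule (rays/s divided by J/s)."""
    return rays_per_s / watts


def rays_per_pixel_per_frame(rays_per_s: float, fps: int, width: int, height: int) -> float:
    """Ray budget available to each pixel of each frame at the given rate."""
    return rays_per_s / fps / (width * height)


print(f"Chip:  {rays_per_joule(PEAK_RAYS_PER_S, CHIP_POWER_W) / 1e6:.0f} MRays/J")
print(f"Board: {rays_per_joule(PEAK_RAYS_PER_S, BOARD_POWER_W) / 1e6:.0f} MRays/J")
print(f"RTX power / board power: {RTX_GAMING_POWER_W / BOARD_POWER_W:.1f}x")
print(f"RTX power / chip power:  {RTX_GAMING_POWER_W / CHIP_POWER_W:.1f}x")
print(f"Rays per frame: {PEAK_RAYS_PER_S / FPS / 1e6:.0f} million")
print(f"Rays per pixel per frame: "
      f"{rays_per_pixel_per_frame(PEAK_RAYS_PER_S, FPS, WIDTH, HEIGHT):.1f}")
```

On these numbers the board draws 22.5 times less power than the measured RTX figure (112.5 times for the chip alone), and the peak rate works out to 10 million rays per frame, or just under five rays per 1080p pixel, at 30 frames per second.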

We believe that heat and power consumption will be critical in the long term for ray-traced devices. Ray tracing powered by dedicated fixed-function hardware will be far more efficient than emulating it on traditional rasterization or general-purpose compute hardware. Offering both bandwidth efficiency and superior quality, our unique, efficiency-focused approach is a win-win.

And while AR and VR have yet to break into the mainstream, many still believe they eventually will. In VR, keeping everything smooth requires techniques such as variable sample rates and foveated rendering, and these are also easier to implement with our hybrid ray tracing, since a ray tracer can choose how many rays to cast for each pixel rather than sampling a fixed grid.
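A minimal sketch of how a renderer might vary its ray count with distance from the viewer’s gaze point follows. The function names, the flat 0.05 degrees-per-pixel display mapping, the 5-degree foveal radius and the 4/2/1 ray counts are illustrative assumptions, not values from any real headset or from Imagination’s hardware.

```python
import math


def rays_for_pixel(px, py, gaze_x, gaze_y, deg_per_pixel=0.05,
                   fovea_deg=5.0, max_rays=4, min_rays=1):
    """Hypothetical foveation policy: how many rays to cast for pixel (px, py).

    Pixels within fovea_deg of the gaze point get max_rays, a ring out to
    twice that angle gets half as many, and the periphery gets min_rays.
    Angle is approximated linearly from pixel distance (flat-screen assumption).
    """
    eccentricity = math.hypot(px - gaze_x, py - gaze_y) * deg_per_pixel
    if eccentricity <= fovea_deg:
        return max_rays
    if eccentricity <= 2 * fovea_deg:
        return max(min_rays, max_rays // 2)
    return min_rays


def frame_ray_budget(width, height, gaze_x, gaze_y, **policy):
    """Total rays for one frame under the foveation policy above."""
    return sum(rays_for_pixel(x, y, gaze_x, gaze_y, **policy)
               for y in range(height) for x in range(width))


# Compare against rendering every pixel at the foveal rate.
uniform = 400 * 400 * 4
foveated = frame_ray_budget(400, 400, gaze_x=200, gaze_y=200)
print(f"foveated frame uses {foveated / uniform:.0%} of the uniform ray budget")
```

A production system would more likely drop below one ray per pixel in the periphery and reconstruct the gaps, which this sketch omits for simplicity.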

Taking mobile ray tracing mainstream

To reach the mainstream, these devices will logically need to be cordless and lightweight so that using them is a pleasant experience. Take the experience of an MIT journalist who attended Facebook’s Oculus conference via a VR headset. The Quest is cordless, but she was ultimately frustrated by the device’s poor battery life. Our focus on power efficiency would be key here.

And how great would it be to have ray-traced games on your mobile? Personally, I’m partial to CSR2, a simple racing game whose big draw is how real the cars look, with their shiny, reflective surfaces. A lot of this is down to physically based rendering (we discussed how well this runs on PowerVR hardware back in 2016), but how incredible would the cars look with true ray-traced reflections?

CSR2 also dabbles in AR, letting you use the camera to place virtual cars in the real world, either at small scale on a table in the room or full-size outdoors. When I virtually placed a Corvette in my own back garden and showed the screenshot to colleagues, it was interesting to note that they were fooled for a brief second before realising that it wasn’t real, and more than anything it was the lighting that gave it away. Now imagine your smartphone being able to analyse the light in the camera view and take it into account when rendering the virtual object: augmented reality taken to the next level.

Ray tracing could equally have an impact on automotive. As we have discussed, many cars now display a rendered model of the vehicle alongside the data from their surround cameras. Using ray tracing, the lighting could be taken into account, making the car on the dashboard appear much more life-like and making obstacles easier to judge.

Ready with ray tracing IP

The upshot, then, is that we are very excited that ray tracing is back on the agenda. Ray tracing has been bubbling under for some time, but once people see it running on the PC, they are certainly going to want, and indeed expect, it on their mobile devices, VR headsets and consoles.

We have many years’ experience creating ray tracing technology and are ready to talk with interested parties about the various ways our IP can bring ray tracing to power-constrained devices in the smartphone, VR/AR, console and automotive markets.

If you want to build next-generation graphics hardware in an energy efficient and cost-effective way, then you need to talk to the experts in power efficiency. Working together, we will not only be able to finally grasp the ‘holy grail’ of graphics but deliver it to an eager audience that’s ready for 3D graphics to move on to the next stage of immersion and realism.